### Beyond the Algorithm: Interpreting the New Wave of AI-Powered Medical Device Approvals

The drumbeat of innovation in AI-powered medical technology is growing louder. The question is no longer *whether* AI will transform healthcare, but *how fast* and under what governance. For those of us working deep in the code, the most telling indicators are not only research papers or funding rounds but the quiet, deliberate pace of regulatory bodies. Recent approvals and submissions from companies like Medtronic and Kelyniam offer a crucial snapshot, not only of individual products but of the maturing relationship between advanced algorithms and the stringent demands of patient safety.

These regulatory milestones are more than bureaucratic checkboxes; they translate complex computational models into a language that regulators can validate, trust, and, ultimately, approve for clinical use.

---

#### The Evolving Blueprint for Approval

When we look at the recent slate of approvals, two key technical and strategic patterns emerge. They provide a blueprint for any organization looking to navigate the challenging path from a promising model to a market-ready medical device.

**1. The Primacy of the “Locked” Algorithm and Robust Validation**

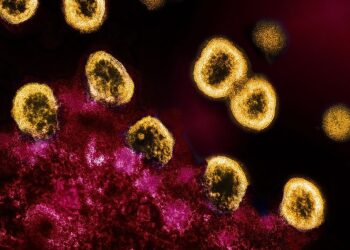

A significant portion of the AI/ML-enabled devices receiving clearance today, from diagnostic imaging tools to surgical planning software, are based on “locked” algorithms. This means the model's parameters are frozen once development and validation are complete: it produces the same output each time it receives the same input, and it does not continue to learn or change after deployment.
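To make “locked” concrete from an engineering standpoint, here is a minimal Python sketch, assuming the model's weights can be serialized as JSON. The release process records a SHA-256 fingerprint of the weights, and a hypothetical `LockedModel` wrapper refuses to load any weights that do not match it. The names `fingerprint_weights` and `LockedModel` and the toy linear `predict` are illustrative only, not any vendor's implementation.

```python
import copy
import hashlib
import json


def fingerprint_weights(weights: dict[str, list[float]]) -> str:
    """Return a SHA-256 hex digest of the weights, serialized with sorted keys."""
    # sort_keys=True makes the digest independent of dict insertion order
    canonical = json.dumps(weights, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


class LockedModel:
    """Hypothetical wrapper that only runs weights matching the released fingerprint."""

    def __init__(self, weights: dict[str, list[float]], released_digest: str):
        if fingerprint_weights(weights) != released_digest:
            raise ValueError("weights do not match the released (locked) version")
        # keep a private copy so later edits to the caller's dict cannot alter the model;
        # the class deliberately exposes no method for updating weights
        self._weights = copy.deepcopy(weights)

    def predict(self, features: list[float]) -> float:
        # toy linear score standing in for any deterministic inference routine
        coef = self._weights["coef"]
        bias = self._weights["bias"][0]
        return sum(w * x for w, x in zip(coef, features)) + bias
```

In this sketch the digest would be stored alongside the release documentation, so any change to the weights, however small, is detectable before the model runs.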

For regulators, this is a matter of predictability and control. A locked model can be rigorously tested against a specific validation dataset, and its performance characteristics (its sensitivity, specificity, and potential biases) can be clearly defined and documented. For a major player like Medtronic, regulatory success with its AI-driven platforms likely hinges on this principle: the company can demonstrate, through clinical evidence, that the algorithm performs consistently and safely within a well-understood set of parameters.
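Sensitivity and specificity come from a confusion matrix computed on the validation set. The following is a minimal sketch in plain Python, assuming binary labels (1 = condition present). It reports both metrics with Wilson score 95% confidence intervals, one common way to quantify the uncertainty in such estimates. The function names are hypothetical.

```python
import math


def confusion_counts(y_true: list[int], y_pred: list[int]) -> tuple[int, int, int, int]:
    """Return (tp, fn, tn, fp) for binary labels where 1 means condition present."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp, fn, tn, fp


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95% coverage)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


def summarize(y_true: list[int], y_pred: list[int]) -> dict[str, object]:
    """Sensitivity and specificity with 95% Wilson intervals."""
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("validation set needs both positive and negative cases")
    return {
        "sensitivity": tp / (tp + fn),  # share of true cases the model catches
        "sensitivity_ci95": wilson_interval(tp, tp + fn),
        "specificity": tn / (tn + fp),  # share of non-cases correctly cleared
        "specificity_ci95": wilson_interval(tn, tn + fp),
    }
```

Reporting the interval alongside the point estimate matters because a small validation set can produce a flattering point estimate with a very wide range of plausible true values.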

The technical challenge here is immense. The burden of proof falls on the quality and diversity of the training and validation data. Was the dataset representative of the intended patient population? Were potential demographic biases identified and mitigated? The approval is not just for the algorithm itself, but for the entire data-driven development and validation process that produced it.
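One simple piece of a bias assessment is to compute performance separately for each demographic subgroup and compare the results. The sketch below assumes each validation record carries a hypothetical subgroup label; it computes per-group sensitivity and flags groups that trail the best group by more than `max_gap`. The 0.05 default is an illustrative placeholder, not a regulatory threshold, and small subgroups would in practice also need confidence intervals before any gap is interpreted.

```python
from collections import defaultdict
from typing import Iterable


def subgroup_sensitivity(records: Iterable[tuple[str, int, int]]) -> dict[str, float]:
    """Per-subgroup sensitivity from (group, y_true, y_pred) records with binary labels.

    Groups with no confirmed positive cases are omitted, since sensitivity is undefined.
    """
    positives: dict[str, int] = defaultdict(int)
    detected: dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


def flag_gaps(per_group: dict[str, float], max_gap: float = 0.05) -> list[str]:
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    if not per_group:
        return []
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)
```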

**2. The Viability of Niche, High-Impact Applications**

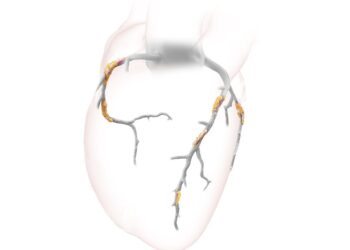

While giants like Medtronic command headlines, the regulatory progress of smaller, more specialized companies like Kelyniam is equally significant. Kelyniam’s focus on patient-specific cranial and facial implants highlights a different facet of AI’s integration into medicine. Here, AI is not a mass-market diagnostic tool but a critical component in a highly personalized manufacturing workflow, likely translating patient-specific CT scans into precise implant designs.

This demonstrates that regulatory pathways are not built solely for large-scale, data-intensive diagnostic systems. They are also adapting to accommodate software, whether standalone Software as a Medical Device (SaMD) or an AI-assisted step in a device's design workflow, that serves a more bespoke, but no less critical, function. For developers in this space, the regulatory question shifts from “How does your model perform on 100,000 images?” to “Can you prove your AI-driven design process reliably and repeatedly produces a safe and effective device for each unique patient?” This is a subtle but profound distinction, focusing on process integrity as much as on pure algorithmic performance.
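A process-integrity check of this kind can be sketched in a few lines. Assuming a hypothetical `design_fn` that turns a scan into named design parameters, the function below runs the pipeline several times on the same input, confirms that every run yields an identical design (compared by hash), and checks one illustrative specification limit. The `thickness_mm` field and the 2.0 mm minimum are placeholders, not real implant requirements or a description of Kelyniam's actual process.

```python
import hashlib
import json
from typing import Callable

# Hypothetical design representation: named geometric parameters in millimetres.
Design = dict[str, float]


def design_digest(design: Design) -> str:
    """SHA-256 of the design, serialized with sorted keys so equal designs hash equally."""
    return hashlib.sha256(json.dumps(design, sort_keys=True).encode("utf-8")).hexdigest()


def check_design_process(
    design_fn: Callable[[list[float]], Design],
    scan: list[float],
    runs: int = 5,
    min_thickness_mm: float = 2.0,  # illustrative placeholder, not a real requirement
) -> list[str]:
    """Run design_fn repeatedly on one input; return a list of problems (empty means pass)."""
    designs = [design_fn(scan) for _ in range(runs)]
    problems = []
    # repeatability: identical input must yield an identical design on every run
    if len({design_digest(d) for d in designs}) != 1:
        problems.append("non-repeatable: the same scan produced different designs")
    # specification: every generated design must respect the minimum thickness
    if any(d["thickness_mm"] < min_thickness_mm for d in designs):
        problems.append(f"thickness below {min_thickness_mm} mm")
    return problems
```

Returning a list of named problems, rather than a single boolean, keeps the output usable as a record of why a particular design run failed.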

---

#### The Road Ahead: From Validation to Vigilance

The current regulatory landscape, as illuminated by these recent decisions, is one of cautious, evidence-based progress. The focus is on de-risking AI by insisting on transparency, rigorous validation, and predictable performance. This is a necessary and responsible phase in the adoption of any powerful new technology in a high-stakes field like medicine.

However, the true potential of AI lies in its ability to adapt and learn. The next great regulatory frontier will be creating frameworks for “adaptive” or continuously learning algorithms. How will we monitor a model that evolves in the field? What new post-market surveillance techniques will be required?
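Post-market surveillance will likely include ongoing performance tracking against confirmed outcomes. The following is a minimal sketch of one such monitor, assuming ground-truth labels eventually arrive for deployed predictions. It tracks sensitivity over a rolling window of confirmed positive cases and raises a flag when that figure drops below a threshold, such as the lower confidence bound established during validation. The window size, `alert_below`, and `min_cases` values are hypothetical.

```python
from collections import deque
from typing import Optional


class RollingSensitivityMonitor:
    """Sensitivity over the most recent confirmed-positive cases, with a simple alert rule.

    window, alert_below and min_cases are hypothetical settings; in practice they would
    come from the device's own validation results and surveillance plan.
    """

    def __init__(self, window: int = 200, alert_below: float = 0.85, min_cases: int = 50):
        self.hits = deque(maxlen=window)  # 1 = detected, 0 = missed; oldest cases drop off
        self.alert_below = alert_below
        self.min_cases = min_cases

    def record(self, y_true: int, y_pred: int) -> None:
        # only confirmed positive cases contribute to sensitivity
        if y_true == 1:
            self.hits.append(1 if y_pred == 1 else 0)

    def sensitivity(self) -> Optional[float]:
        if not self.hits:
            return None
        return sum(self.hits) / len(self.hits)

    def should_alert(self) -> bool:
        # require a minimum number of cases so a handful of misses cannot trigger an alert
        s = self.sensitivity()
        return s is not None and len(self.hits) >= self.min_cases and s < self.alert_below
```

For a continuously learning model, a monitor like this would presumably need to run per model version, so that a drop can be traced to a specific update.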

The approvals we see today are the foundation. They are building trust and establishing the operational muscle—both within companies and at regulatory agencies—to handle this next generation of intelligent medical technology. For now, each approval is a hard-won victory that proves the same thing: a brilliant algorithm is only the beginning. The real work lies in proving its safety, efficacy, and readiness for the most important real-world application of all—patient care.

This post is based on the original article at https://www.bioworld.com/articles/724125-regulatory-actions-for-sept-19-2025.